Fear, the class keyword, you must not!

Yet another TypeScript memory optimization story

So, my TypeScript patch that I wrote about previously was recently merged. This gave me quite a bit of motivation to start up the profiler again and look for some more wins.

Memory profiling JS can sometimes feel like a dark art, as even the TS team acknowledged in their current half-year roadmap:

> much of what we discovered was also that profiling memory allocations in JavaScript applications is really hard. Infrastructure seems lacking in this area (e.g. figuring out what is being allocated too much), and much of our work may reside in learning about more tools

So I want to take this chance to highlight how I discovered another easy ~2% win just by looking at the memory profile and squinting really hard.

# Assumptions

Most of the memory optimizations I did started as an informed guess based on some assumptions. I then ran experiments to see whether my guess was right.

That being said, these things are not an exact science, and they might work very differently across JS engines. I will focus on Node/v8 here, since I would guess that covers >95% of TypeScript usage.

My main focus here is a few things I learned by watching recorded talks about v8 performance:

- v8 can inline properties of objects

- inlined properties are good for performance, and also memory usage

- v8 learns what to inline by observing your code

- adding the same properties, with the same types in the same order, as early as possible is good

- slapping on random properties in random order is bad

- v8 will try to group objects together based on their constructor function
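Here is a small TypeScript sketch that makes the "same properties, same order" rule a bit more concrete. The `Item` type, the `ItemRecord` class and both factory functions are hypothetical and exist only for illustration. `Object.keys` only exposes insertion order. It works as a visible proxy for the hidden classes v8 tracks internally, but it does not let you inspect them.

```typescript
// Hypothetical type, used only to illustrate object shapes.
interface Item {
  id: number;
  label?: string;
  weight?: number;
}

// Consistent: every instance gets the same properties, in the same order,
// right at construction time. Unused ones are explicitly `undefined`.
class ItemRecord implements Item {
  id: number;
  label: string | undefined;
  weight: number | undefined;
  constructor(id: number, label?: string, weight?: number) {
    this.id = id;
    this.label = label;
    this.weight = weight;
  }
}

// Inconsistent: properties are bolted on later, conditionally and in
// varying order, so logically identical objects end up with different shapes.
function makeAdHoc(id: number, label?: string, weight?: number): Item {
  const item = {} as Item;
  if (weight !== undefined) item.weight = weight;
  item.id = id;
  if (label !== undefined) item.label = label;
  return item;
}

type Factory = (id: number, label?: string, weight?: number) => Item;

// Object.keys reports string keys in insertion order: a visible proxy
// for the layout v8 has to track per shape.
function keyOrders(make: Factory): string[] {
  const samples = [make(1, "a", 2), make(2, undefined, 3), make(3, "c")];
  return samples.map((o) => Object.keys(o).join(","));
}

// Three identical key orders for the class-based objects.
console.log(keyOrders((id, label, weight) => new ItemRecord(id, label, weight)));
// Three different key orders for the ad-hoc objects.
console.log(keyOrders(makeAdHoc));
```

The class version produces a single key order (`id,label,weight`) for every instance. The ad-hoc version produces three different orders from three calls. That kind of shape fragmentation makes it harder for v8 to inline properties.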

# Analysing a Memory Profile

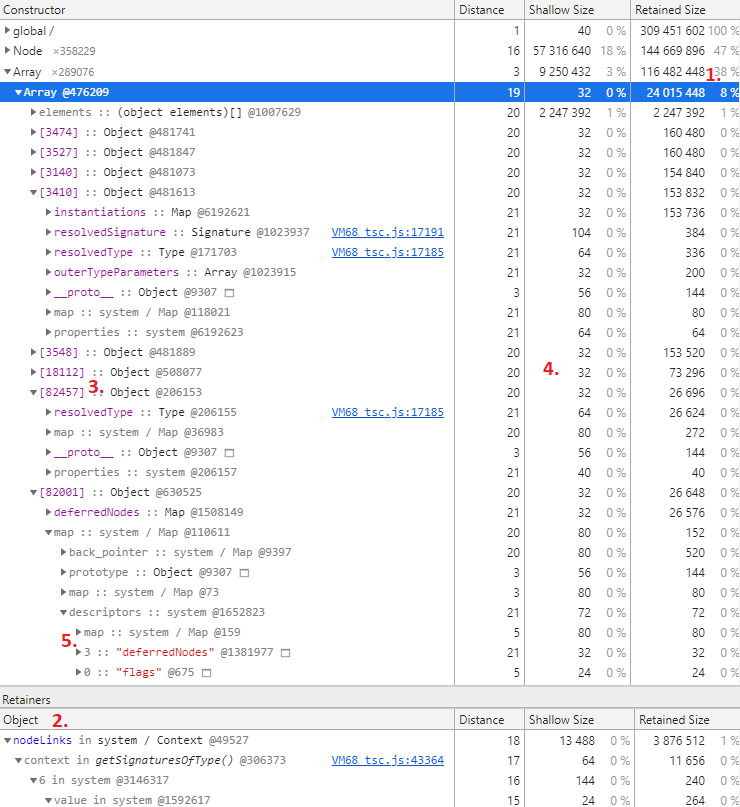

So I just opened up a memory profile and looked for anything that caught my eye. Here is what I saw:

- There is a really large Array with a retained size of 24M, which means garbage collecting it would transitively free up 8% of the memory usage (?)

- That Array is assigned to a variable called `nodeLinks` somewhere.

- Its elements are all anonymous `Object`s, so they don't have a dedicated constructor.

- Each one of those is 32 bytes, which is 4 * 8, so apart from the v8 internal special properties `map`, `properties` and `elements`, it has just one inlined property.

- Expanding `map.descriptors` shows us the property descriptors. I didn't know about that one before. We can also see that different objects have different properties on them.
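Here is the size arithmetic from the bullets above as a tiny helper. It assumes a 64-bit build where each header slot and each in-object property takes one 8-byte word, which is what the 4 * 8 math implies. Builds with pointer compression use 4-byte slots and would need a different word size. `inlineSlots` is a hypothetical name.

```typescript
// Assumption: 64-bit v8 without pointer compression, so every slot is 8 bytes.
const WORD_SIZE_BYTES = 8;
// Every JS object starts with three pointers: map, properties and elements.
const HEADER_SLOTS = 3;

// Hypothetical helper: how many in-object properties fit into an object of
// the given shallow size, as reported by the heap snapshot.
function inlineSlots(shallowSizeBytes: number): number {
  const slots = shallowSizeBytes / WORD_SIZE_BYTES;
  if (!Number.isInteger(slots) || slots < HEADER_SLOTS) {
    throw new RangeError(`unexpected object size: ${shallowSizeBytes} bytes`);
  }
  return slots - HEADER_SLOTS;
}

console.log(inlineSlots(32)); // 1
```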

Now comes a wild speculation on my part, which turned out to be correct in the end: What if, instead of having anonymous objects, we actually create a dedicated constructor function? Will that help v8 to make better inlining decisions?

# Optimizing the Code

So I started looking at the TS codebase, searching for this nodeLinks variable.

The variable comes from `checker.ts`, which is too large for GitHub to display, so I can't permalink it. Here it is:

```typescript
// definition:
const nodeLinks: NodeLinks[] = [];

// usage:
function getNodeLinks(node: Node): NodeLinks {
  const nodeId = getNodeId(node);
  return nodeLinks[nodeId] || (nodeLinks[nodeId] = { flags: 0 } as NodeLinks);
}
```

You might also want to look at the definition of `NodeLinks`.

The `NodeLinks` interface defines 26 properties, of which only `flags` and `jsxFlags` are mandatory. By the way, `jsxFlags` is not initialized in the `getNodeLinks` function, so there is null-unsafety right there!
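Here is a minimal sketch of why the cast hides the problem. It uses a hypothetical, trimmed-down `NodeLinksLike` interface instead of the real 26-property `NodeLinks`:

```typescript
// Hypothetical, trimmed-down stand-in for the real 26-property NodeLinks.
interface NodeLinksLike {
  flags: number;
  jsxFlags: number; // mandatory in the type
  resolvedSymbol?: unknown;
}

// Same pattern as getNodeLinks: the `as` cast makes the compiler accept an
// object literal that is missing the mandatory `jsxFlags`.
function createWithCast(): NodeLinksLike {
  return { flags: 0 } as NodeLinksLike;
}

const links = createWithCast();
// Statically typed as `number`, but `undefined` at runtime.
const jsxFlags: number = links.jsxFlags;
console.log(typeof jsxFlags); // undefined
```

Without the `as` cast, the compiler would reject the object literal because `jsxFlags` is missing.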

Anyway, let's create a quick constructor function for all the `NodeLinks`, call it with `new`, and see whether that helps v8 optimize the object allocations.
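Here is a rough sketch of what such a change could look like. It is not the code of the actual patch. `NodeLinksImpl` and the trimmed-down `NodeLinks` interface are hypothetical, and `getNodeId` is replaced by passing a numeric id directly. I used a class because it is the idiomatic TypeScript way to get a constructor function that you call with `new`.

```typescript
// Hypothetical, trimmed-down NodeLinks; the real interface has 26 properties.
interface NodeLinks {
  flags: number;
  jsxFlags: number;
  resolvedSymbol?: unknown;
}

// A dedicated constructor: every instance starts with the same properties in
// the same order, and heap snapshots group the instances under this class
// name instead of the anonymous `Object` bucket.
class NodeLinksImpl implements NodeLinks {
  flags = 0;
  jsxFlags = 0;
  resolvedSymbol: unknown = undefined;
}

const nodeLinks: NodeLinks[] = [];

// Simplified: takes a numeric node id instead of calling getNodeId(node).
function getNodeLinks(nodeId: number): NodeLinks {
  return nodeLinks[nodeId] || (nodeLinks[nodeId] = new NodeLinksImpl());
}

const links = getNodeLinks(42);
links.flags |= 1;
console.log(links.constructor.name, getNodeLinks(42) === links); // NodeLinksImpl true
```

Initializing `jsxFlags` in the constructor also closes the null-safety hole mentioned above.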

# Verifying the Optimization

Optimizing these cases involves a lot of guesswork and shots in the dark, so it is very important to check what the code changes actually achieved. Let's take a look:

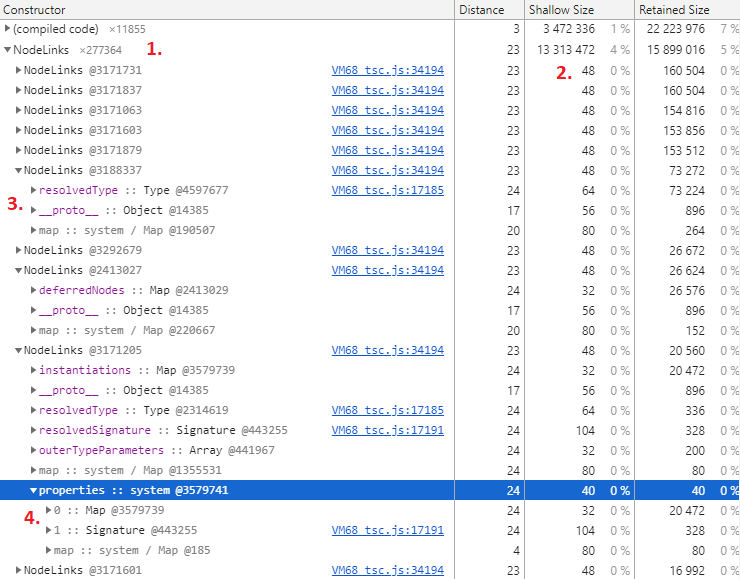

- We now have a new group for all the `NodeLinks`, and there are ~277k of those, quite a lot actually.

- Each one of them is 48 bytes, which is 6 * 8, so we can have 3 inlined properties. On a side note, the whole `nodeLinks` Array now has a retained size of 17M, vs. the 24M from before.

- Some smaller variants use only inline properties. BTW, the `flags` prop is not listed here, I think because it is an integer type and not a pointer type.

- Some larger variants still have external `properties`, which are bad for both performance and memory usage.

So from this quick look we can see that we were successful. Memory usage has dropped a bit. We now have all the `NodeLinks` grouped together, and at least half of them have all of their properties inlined, without the need for an external properties hashtable.
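You can repeat this kind of before/after comparison outside the DevTools UI. Node's built-in `v8.writeHeapSnapshot()` writes a snapshot file, and the DevTools Memory tab can load it. This sketch rests on some assumptions. The `Links` class and the allocation loop are hypothetical stand-ins for a real type-check run. This is also not necessarily how the screenshots above were produced.

```typescript
import { writeHeapSnapshot } from "node:v8";
import { statSync } from "node:fs";
import { tmpdir } from "node:os";
import { join } from "node:path";

// Hypothetical stand-in for the objects you want to inspect.
class Links {
  flags = 0;
}

// Writes a .heapsnapshot file synchronously and returns its path.
// The whole heap is serialized while this runs, so expect a pause.
function takeSnapshot(label: string): string {
  const target = join(tmpdir(), `${label}-${process.pid}.heapsnapshot`);
  return writeHeapSnapshot(target);
}

// Keep the objects reachable so they show up in the snapshot.
const retained: Links[] = [];
for (let i = 0; i < 100_000; i++) {
  retained.push(new Links());
}

const file = takeSnapshot("after-change");
console.log(`${file}: ${statSync(file).size} bytes, ${retained.length} Links retained`);
```

Taking one snapshot before and one after a change lets you compare the per-constructor groups and their shallow and retained sizes.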

# The TypeScript Codebase

However, just by looking at the definition of `NodeLinks` and at the memory profile, we can see that probably no object has all 26 properties it could potentially have. The properties could most likely be grouped into separate types.

I see this general code pattern a lot in the TS codebase. The `Node` interface I was looking at previously was another case.

The TS codebase defines a very broad interface type with tons of optional properties. It then uses explicit and apparently very unsafe casts all around the codebase, and adds properties some time later, probably in an arbitrary order. These are exactly the things you should not do, both from a v8 optimization standpoint and from a general type-safety standpoint.

Well, there is probably a reason for it: the TypeScript codebase is ancient. I think it has been self-hosting since the very beginning, but it has only had discriminated union types and `strictNullChecks` since 2.0, and `strictPropertyInitialization` since 2.7. Also, engines did not have native class support back then.

So from my perspective, there is quite a bit of technical debt to be paid! I would strongly suggest embracing union types of native classes with initialized properties in the codebase.
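Here is a minimal sketch of that suggestion. The classes are hypothetical and are not taken from `checker.ts`. Each class initializes every property it declares, so `strictPropertyInitialization` can check it. A `kind` discriminant lets the compiler narrow the union without casts. The field types are simplified to strings and numbers for illustration.

```typescript
// Hypothetical split of one broad "links" interface into small classes.
// Every class initializes all of its own properties, so
// strictPropertyInitialization can verify them, and each class gets one
// stable shape.
class SymbolLinks {
  readonly kind = "symbol";
  resolvedSymbol: string;
  constructor(resolvedSymbol: string) {
    this.resolvedSymbol = resolvedSymbol;
  }
}

class TypeLinks {
  readonly kind = "type";
  resolvedType: string;
  constructor(resolvedType: string) {
    this.resolvedType = resolvedType;
  }
}

class FlowLinks {
  readonly kind = "flow";
  flags: number;
  reachable: boolean;
  constructor(flags: number, reachable: boolean) {
    this.flags = flags;
    this.reachable = reachable;
  }
}

// A union of classes instead of one interface with many optional properties.
type Links = SymbolLinks | TypeLinks | FlowLinks;

// The `kind` discriminant lets the compiler narrow without any casts.
function describe(links: Links): string {
  switch (links.kind) {
    case "symbol":
      return `symbol ${links.resolvedSymbol}`;
    case "type":
      return `type ${links.resolvedType}`;
    case "flow":
      return `flow flags=${links.flags} reachable=${links.reachable}`;
    default: {
      // Compile-time exhaustiveness check: adding a new class to `Links`
      // without handling it here is a type error.
      const unreachable: never = links;
      return unreachable;
    }
  }
}

console.log(describe(new FlowLinks(2, true))); // flow flags=2 reachable=true
```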

Or as Yoda would say:

> Fear, the class keyword, you must not!

In my opinion, it would help engines to better optimize the code, it would help type safety, and it would also help onboarding new contributors.

Well, so long! I hope this has helped some people better understand the memory optimization tools and how to approach such problems.