Rust `thread_local!`s are surprisingly expensive

These last couple of weeks, I have been obsessing over “the cost of observability”, specifically metrics. This whole topic is quite big and really something for a conference. Literally so, as I submitted it as a talk for RustFest this year ;-)

But on this topic, I was experimenting with doing thread-local aggregation of metrics, and doing a ton of profiling trying to micro-optimize the heck out of it.

To do this thread-local aggregation, I am pulling in the amazing thread_local crate, which I have since also contributed to \o/.

The crate allows you to carry around an arbitrary container of data in your structs, which internally manages a concurrent list that is indexed using a truly thread-local index.

The most awesome thing about this crate is that it also allows you to iterate over all the thread-local values if you want to. This makes it perfect for thread-local aggregation, where the per-thread values are then aggregated once every N seconds across the whole process.

This does come with one disadvantage, though: because other threads can reach your data while iterating, you have to wrap it in a thread-safe Mutex if you want to modify it.
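To make the pattern a bit more concrete, here is a minimal std-only sketch of the idea. It is not how the thread_local crate is implemented: the fixed-size `Vec`, the `MAX_THREADS` limit, and the names `Counter`, `my_index` and `run_demo` are all assumptions made up for illustration. It only shows the shape of the thing: a thread-local index picks a slot, each slot sits behind a Mutex, and a flush walks all slots.

```rust
use std::cell::Cell;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical fixed capacity, standing in for the crate's growable concurrent list.
const MAX_THREADS: usize = 64;

/// Hands out one slot index per thread, in order of first use.
/// (This sketch never recycles indices when a thread exits.)
static NEXT_INDEX: AtomicUsize = AtomicUsize::new(0);

thread_local! {
    // The truly thread-local part: each thread caches its own slot index.
    static MY_INDEX: Cell<Option<usize>> = const { Cell::new(None) };
}

/// Returns this thread's slot index, assigning one on first use.
fn my_index() -> usize {
    MY_INDEX.with(|cell| match cell.get() {
        Some(index) => index,
        None => {
            let index = NEXT_INDEX.fetch_add(1, Ordering::Relaxed);
            assert!(index < MAX_THREADS, "sketch ran out of slots");
            cell.set(Some(index));
            index
        }
    })
}

/// A counter with one Mutex-protected slot per thread.
pub struct Counter {
    slots: Vec<Mutex<u64>>,
}

impl Counter {
    pub fn new() -> Self {
        Self {
            slots: (0..MAX_THREADS).map(|_| Mutex::new(0)).collect(),
        }
    }

    /// Hot path: only ever touches the calling thread's own slot,
    /// so the Mutex is practically uncontended.
    pub fn add(&self, value: u64) {
        *self.slots[my_index()].lock().unwrap() += value;
    }

    /// Periodic aggregation: takes and resets every slot, then sums them.
    pub fn flush(&self) -> u64 {
        self.slots
            .iter()
            .map(|slot| std::mem::take(&mut *slot.lock().unwrap()))
            .sum()
    }
}

/// Spawns `threads` workers that each add 1 `per_thread` times,
/// then returns the flushed total.
pub fn run_demo(threads: usize, per_thread: u64) -> u64 {
    let counter = Arc::new(Counter::new());
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..per_thread {
                    counter.add(1);
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    counter.flush()
}

fn main() {
    println!("total = {}", run_demo(4, 1000));
}
```

The Mutex is needed here for the same reason as in the real thing: `flush` may run on a different thread than the one that owns a slot.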

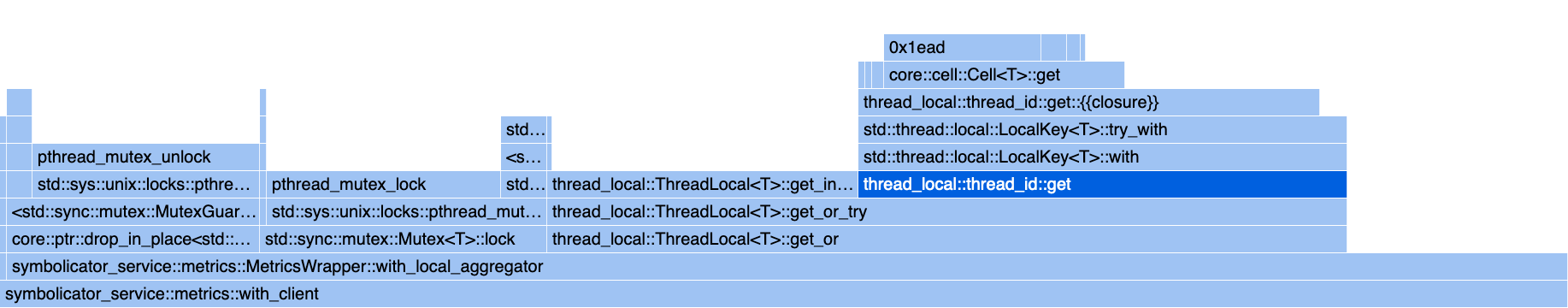

As I was then profiling the implementation, I was really shocked by what I saw. Here is a flamegraph focussed on the main point I want to make:

We can see two things here:

First, an uncontended Mutex which is only used by a single thread (for 99.9% of the time) is quite fast.

As another note: I created this profile on macOS (yeah, I know) which, as you see, is still using pthread_mutex under the hood.

macOS will soon catch up to other OSs which already have a custom Mutex implementation, so things should get even faster still.

But more shockingly, the “true” Rust thread_local! is surprisingly slow. This is literally a Cell<Option<Thread>> in this case, where Thread is just a bunch of usizes that are used to access the desired data in the concurrent list.

It is just 40 bytes on x64. So how can this Copy be this slow?
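For reference, the access pattern in question looks roughly like the sketch below. The `Thread` struct here is a hypothetical stand-in: its field names and field count are my assumptions, not copied from the crate. The sketch prints the size of the cached value so you can compare it on your own target.

```rust
use std::cell::Cell;
use std::mem::size_of;

/// Hypothetical stand-in for the crate's `Thread`: a handful of `usize`s
/// describing where this thread's entry lives in the concurrent list.
/// Field names and count are assumptions made for this sketch.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Thread {
    pub id: usize,
    pub bucket: usize,
    pub bucket_size: usize,
    pub index: usize,
}

thread_local! {
    // `const` initialization avoids a lazy-init check on each access.
    static THREAD: Cell<Option<Thread>> = const { Cell::new(None) };
}

/// Hot path: copy the cached value out of the thread-local.
pub fn current() -> Option<Thread> {
    THREAD.with(|cell| cell.get())
}

/// Slow path: remember this thread's entry for later lookups.
pub fn set_current(thread: Thread) {
    THREAD.with(|cell| cell.set(Some(thread)));
}

fn main() {
    println!(
        "size_of::<Cell<Option<Thread>>>() = {} bytes",
        size_of::<Cell<Option<Thread>>>()
    );
    set_current(Thread { id: 1, bucket: 0, bucket_size: 1, index: 0 });
    println!("current() = {:?}", current());
}
```

So the hot path really is nothing more than `with` plus a `Cell::get`, which is what makes the profile so surprising.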

As another fun fact to put things into perspective: the very slim line on the left of the flamegraph that you can barely make out is a OnceLock::get(), which involves an atomic read.

Or maybe this atomic is actually what makes the thread_local! slow? Who knows.
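If you want to poke at this yourself, a crude timing loop like the following is one way to start. Treat it as a rough sketch, not a rigorous benchmark: the helper names (`time_it`, `read_once`, `read_local`, `bump_mutex`) are made up for this example, the loop overhead is not subtracted, and the optimizer may treat the three cases differently. A proper benchmark harness and a profiler will tell you more.

```rust
use std::cell::Cell;
use std::hint::black_box;
use std::sync::{Mutex, OnceLock};
use std::time::{Duration, Instant};

static ONCE: OnceLock<usize> = OnceLock::new();
static MUTEX: Mutex<usize> = Mutex::new(0);

thread_local! {
    static LOCAL: Cell<Option<usize>> = const { Cell::new(None) };
}

/// Runs `op` `iters` times and returns the elapsed wall-clock time.
pub fn time_it(iters: u32, mut op: impl FnMut() -> usize) -> Duration {
    let start = Instant::now();
    for _ in 0..iters {
        // `black_box` discourages the compiler from optimizing the call away.
        black_box(op());
    }
    start.elapsed()
}

/// Reads the `OnceLock`; this involves an atomic load.
pub fn read_once() -> usize {
    ONCE.get().copied().unwrap_or(0)
}

/// Reads the `thread_local!` cell.
pub fn read_local() -> usize {
    LOCAL.with(|cell| cell.get()).unwrap_or(0)
}

/// Locks the (uncontended) Mutex, increments, and returns the new value.
pub fn bump_mutex() -> usize {
    let mut guard = MUTEX.lock().unwrap();
    *guard += 1;
    *guard
}

fn main() {
    ONCE.get_or_init(|| 1);
    LOCAL.with(|cell| cell.set(Some(1)));

    let iters = 10_000_000;
    println!("OnceLock::get      : {:?}", time_it(iters, read_once));
    println!("thread_local! read : {:?}", time_it(iters, read_local));
    println!("uncontended Mutex  : {:?}", time_it(iters, bump_mutex));
}
```

Build with `--release`, and expect the numbers to vary a lot between platforms and compiler versions.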

I pretty much just wanted to share this surprising outcome. This is also not really news.

@matklad has already blogged about this years ago.

Also NOTE that a nightly-only #[thread_local] attribute exists as well, which the thread_local crate will use when the nightly feature is enabled.

I haven’t tested how much faster that would be, if at all.

There is also an initiative underway in the Rust compiler to improve thread_local! implementation details.

This is pretty much all I wanted to share, as this is also not the first time that I have seen thread_local! being slow.

As there is an ongoing initiative to clean it up, plus the nightly-only #[thread_local] looming on the horizon, I am quite hopeful that things will improve sometime in the future.